ChatGPT and Family Game Night

I asked ChatGPT to give me some questions and answers in the style of Trivial Pursuit, for a specified category and an education level. And it worked! Mostly...

As a first foray into the world of generative AI on this newsletter, I thought I'd start with something fun. We'll talk work and productivity and economics and the end of the world in due time, but for now let's begin with something a bit more ... trivial.

I love Trivial Pursuit, and so do my kids. However, it can be hard to get a family game going, as the differing ages and ability levels get in the way. Not to mention how old and out-of-date some of our Genus Edition questions are. Those kinds of games usually end up more frustrating than fun, especially for the younger members of the family. BUT... maybe ChatGPT can help?!

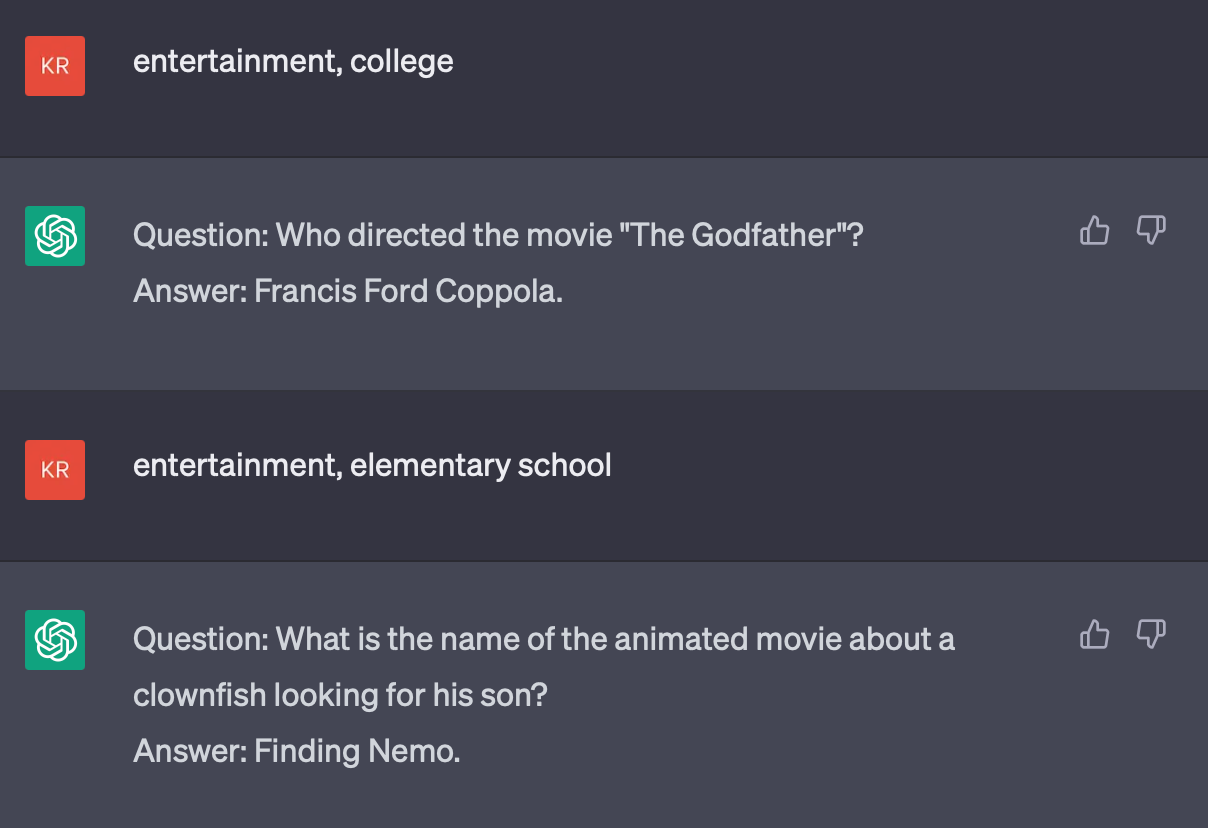

On a whim, I asked it to give me some questions and answers in the style of Trivial Pursuit. I specified a category and an education level. And it worked!

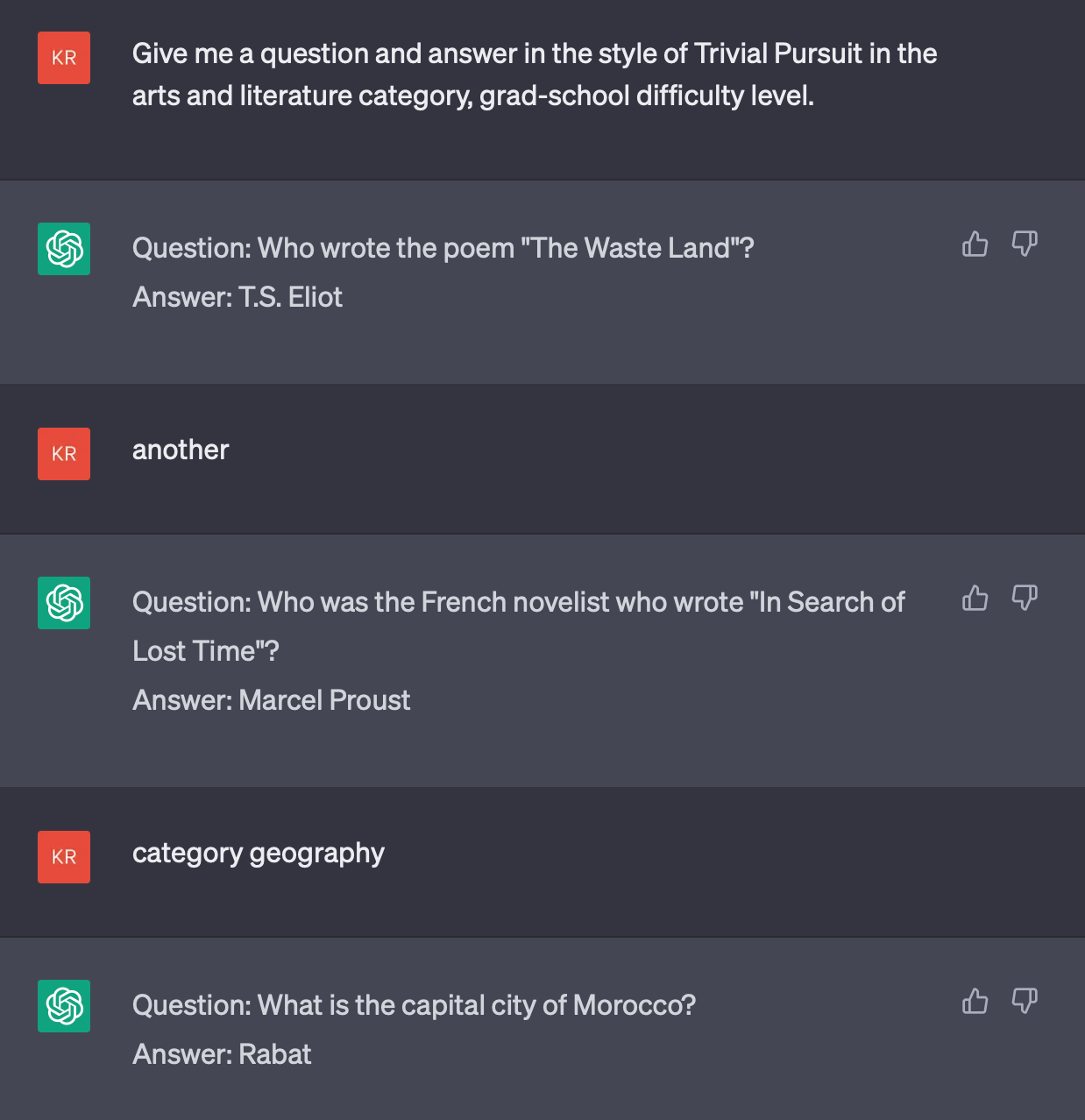
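If you'd rather script the request than retype it, here is a minimal sketch of the two inputs I gave ChatGPT (a category and an education level) assembled into a prompt. The function name, the question count, and the exact wording are my own illustration, not the literal prompt from the screenshot, and nothing here calls ChatGPT; it only builds the text you would paste in.

```python
def build_trivia_prompt(category: str, level: str, count: int = 5) -> str:
    """Assemble a plain-text request for Trivial Pursuit-style questions.

    The wording is hypothetical; adjust it to taste.
    """
    return (
        f"Give me {count} questions and answers in the style of Trivial Pursuit. "
        f"Category: {category}. "
        f"Education level: {level}."
    )


# Build a prompt to paste into the chat window.
print(build_trivia_prompt("Science & Nature", "grad school"))
```

Keeping the category and level as separate parameters makes it easy to dial the difficulty up or down for each player.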

First I asked it for some questions at grad-school difficulty for myself. I quickly started to regret my decision — I'd have to get these questions right if I wanted to keep up my winning streak with my kids!

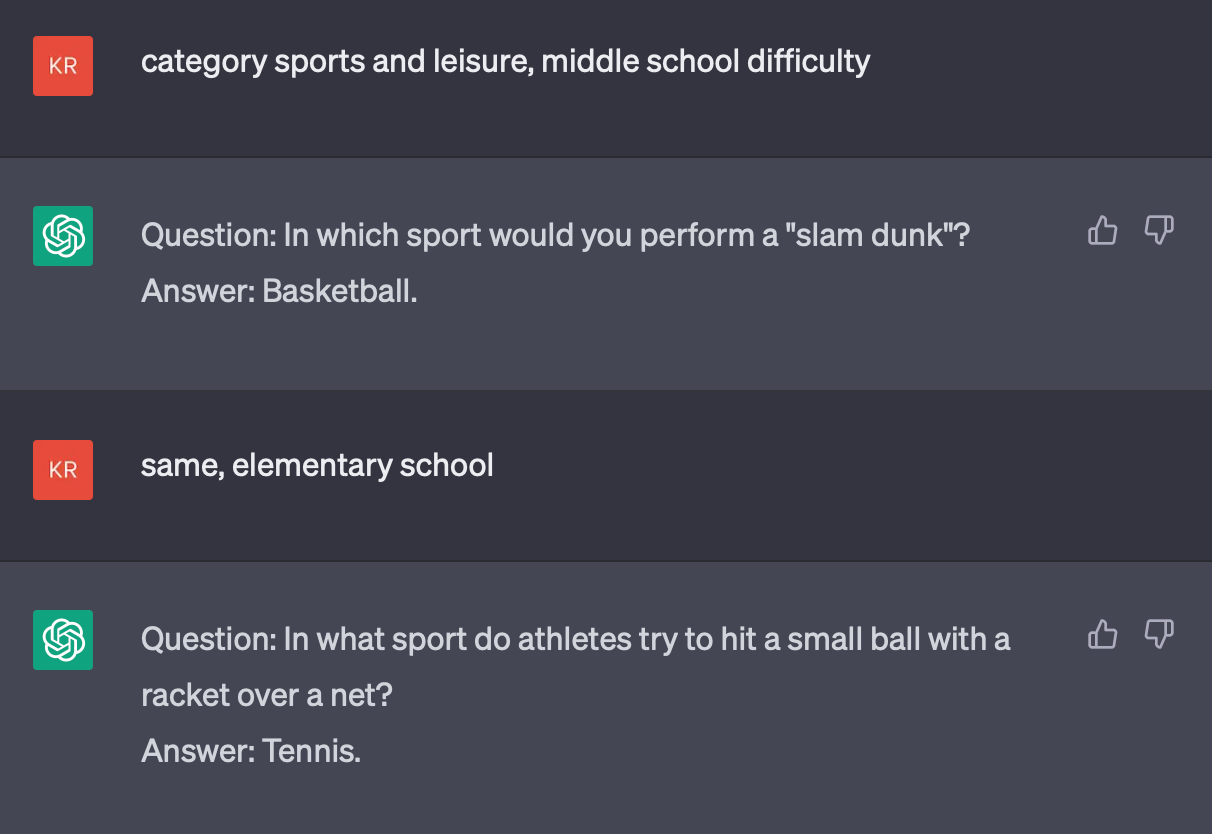

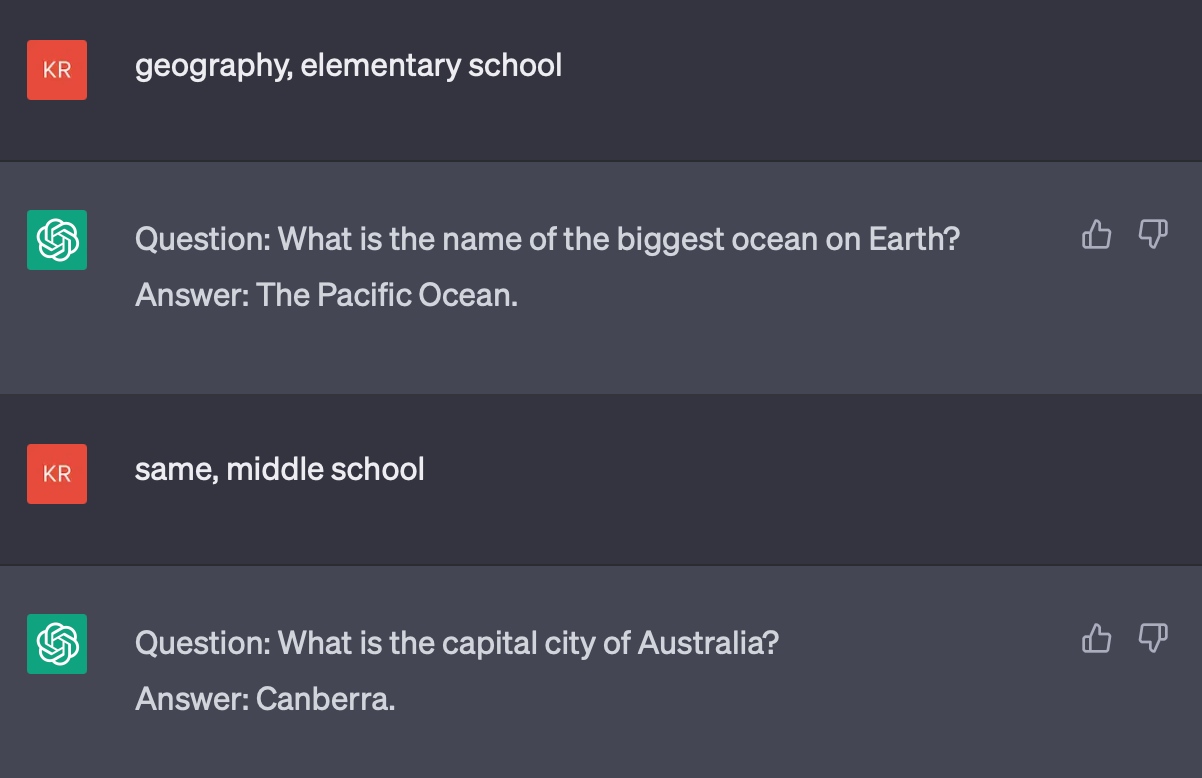

Then I started to prompt it with lower education levels to see how it did for kids. The results were surprisingly good!

Even the dreaded Entertainment category worked well. (We have an ancient Genus Edition of the board game, so those questions are woefully out of date for us.)

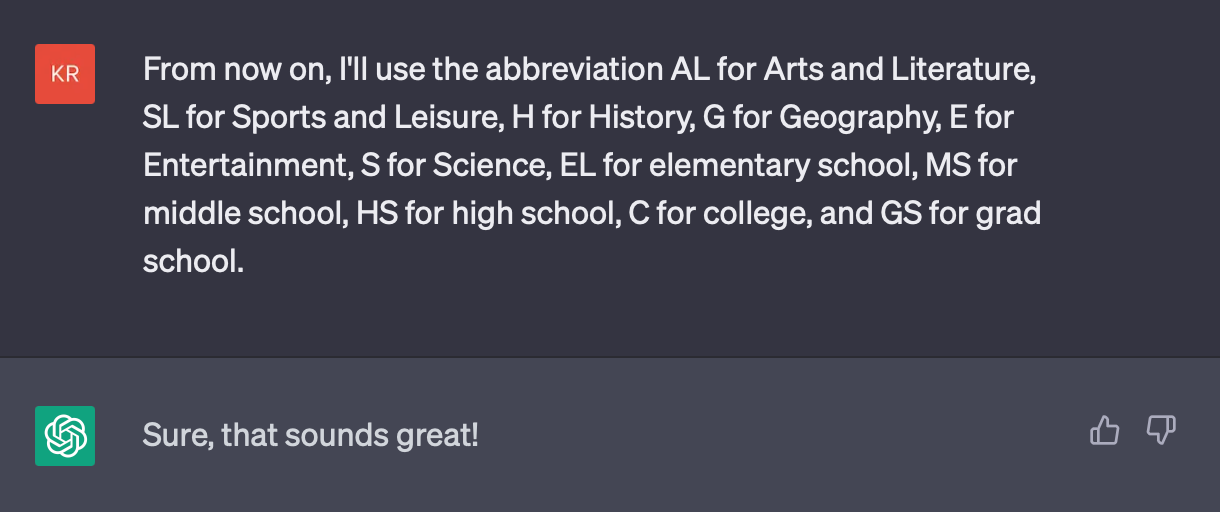

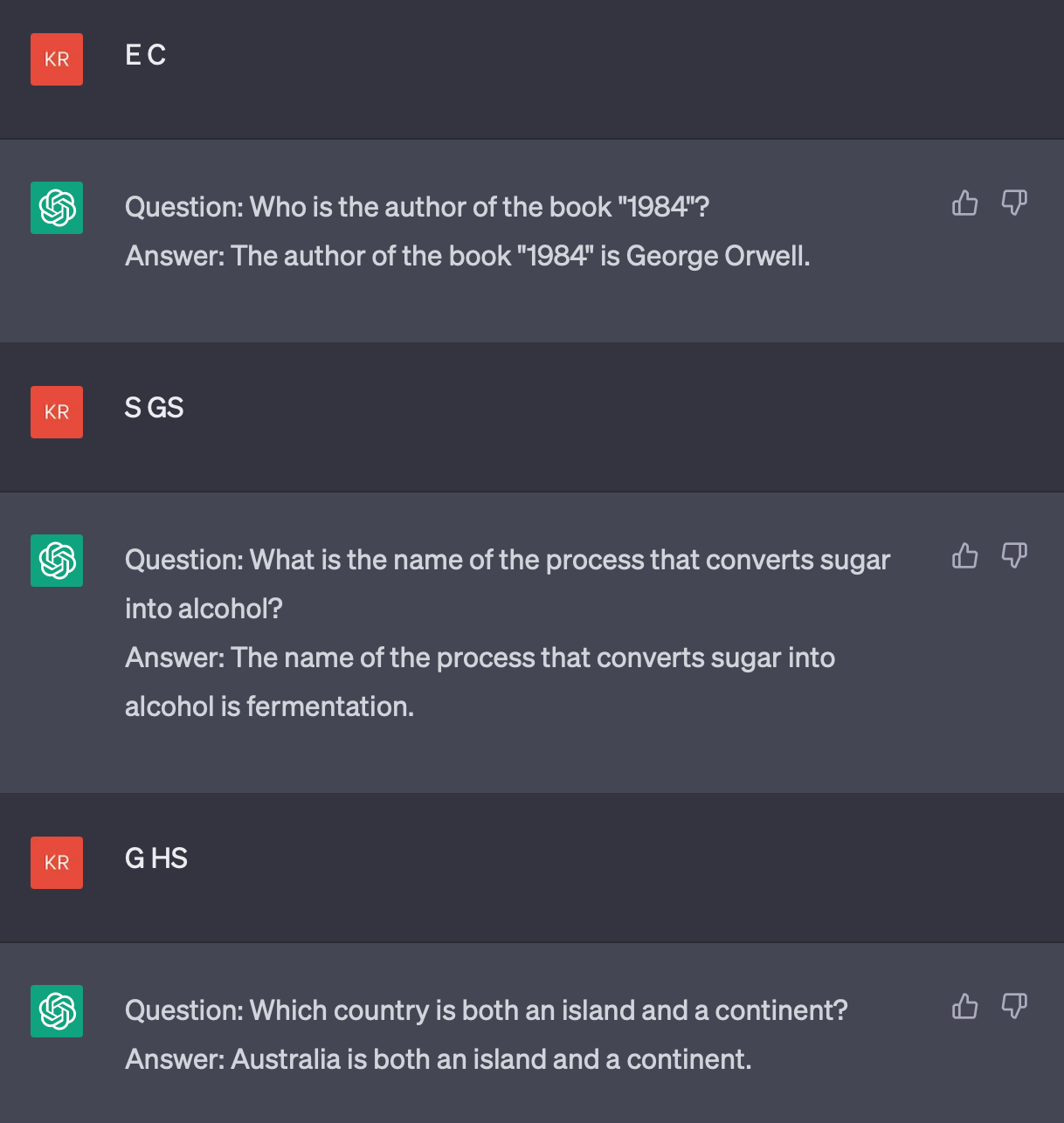

If you haven't noticed by now, I stopped typing out the whole prompt each time, and ChatGPT knew what I meant. I didn't even have to tell it I was going to do that. The default setup at chat.openai.com is pretty good at learning quickly from context, at least in situations like this.

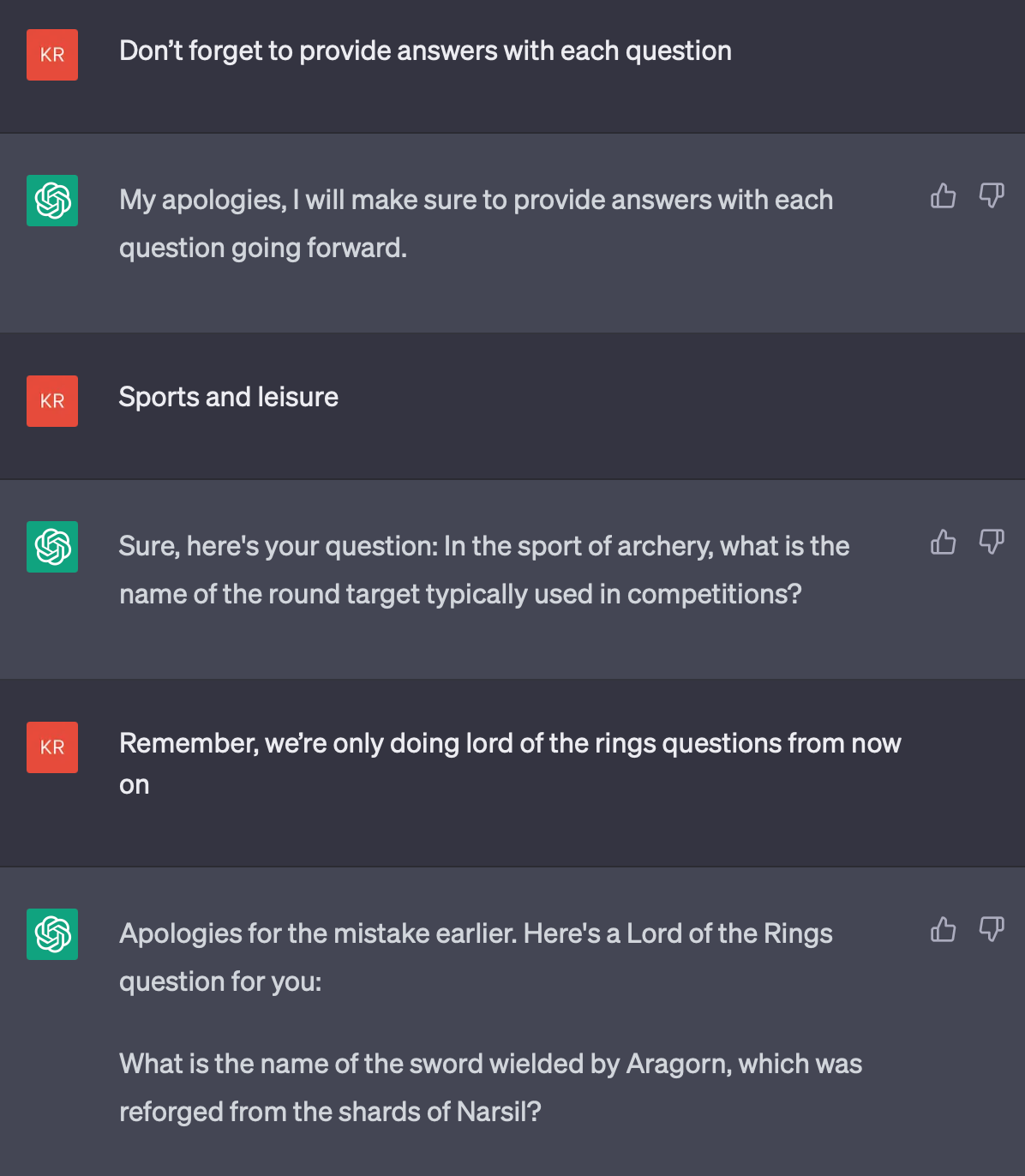

Then I told ChatGPT that I would start using abbreviations, like those on the Trivial Pursuit cards: AL for Arts & Literature, SL for Sports & Leisure, etc. I also added my own abbreviations for different education levels.

ChatGPT didn't miss a beat.

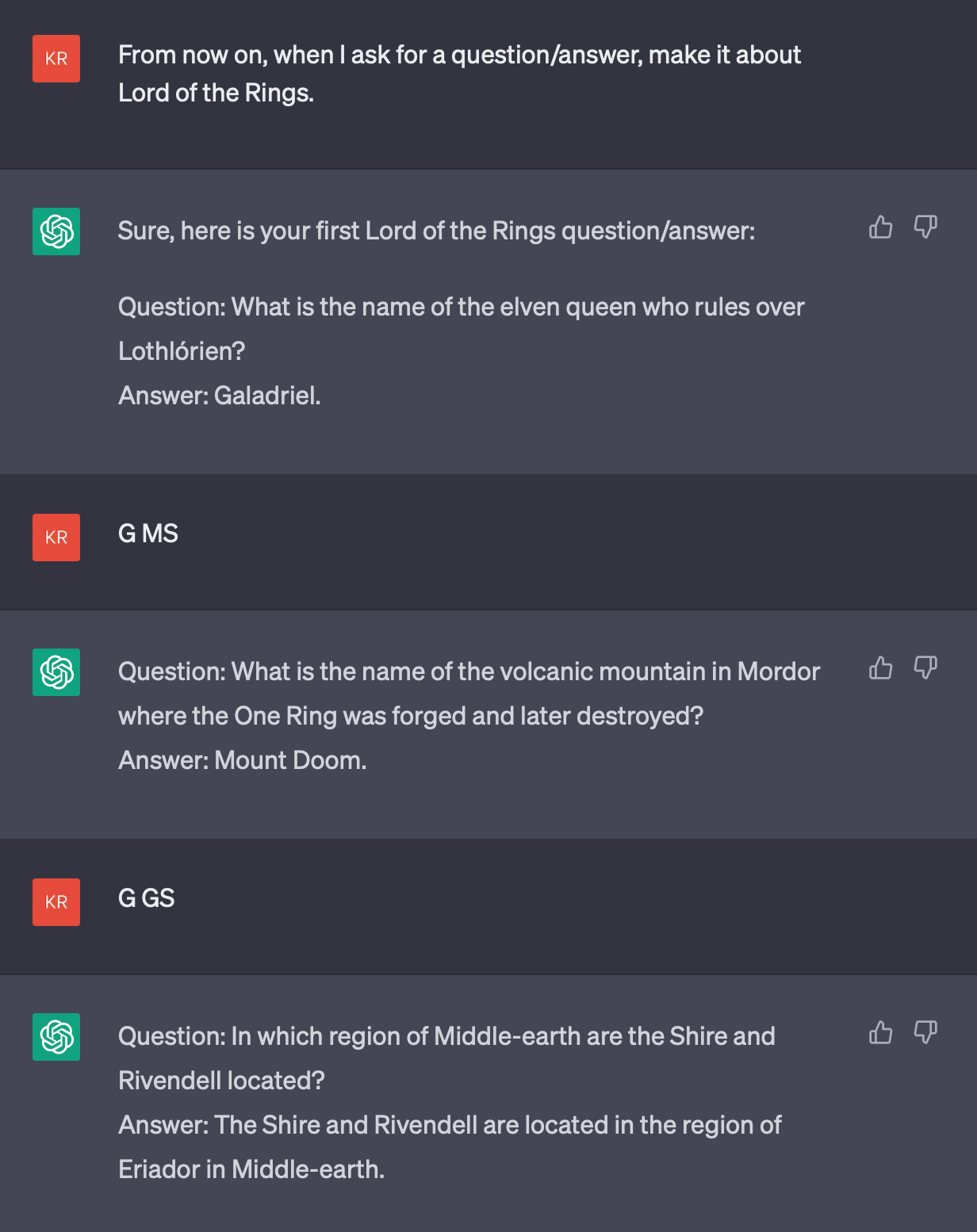

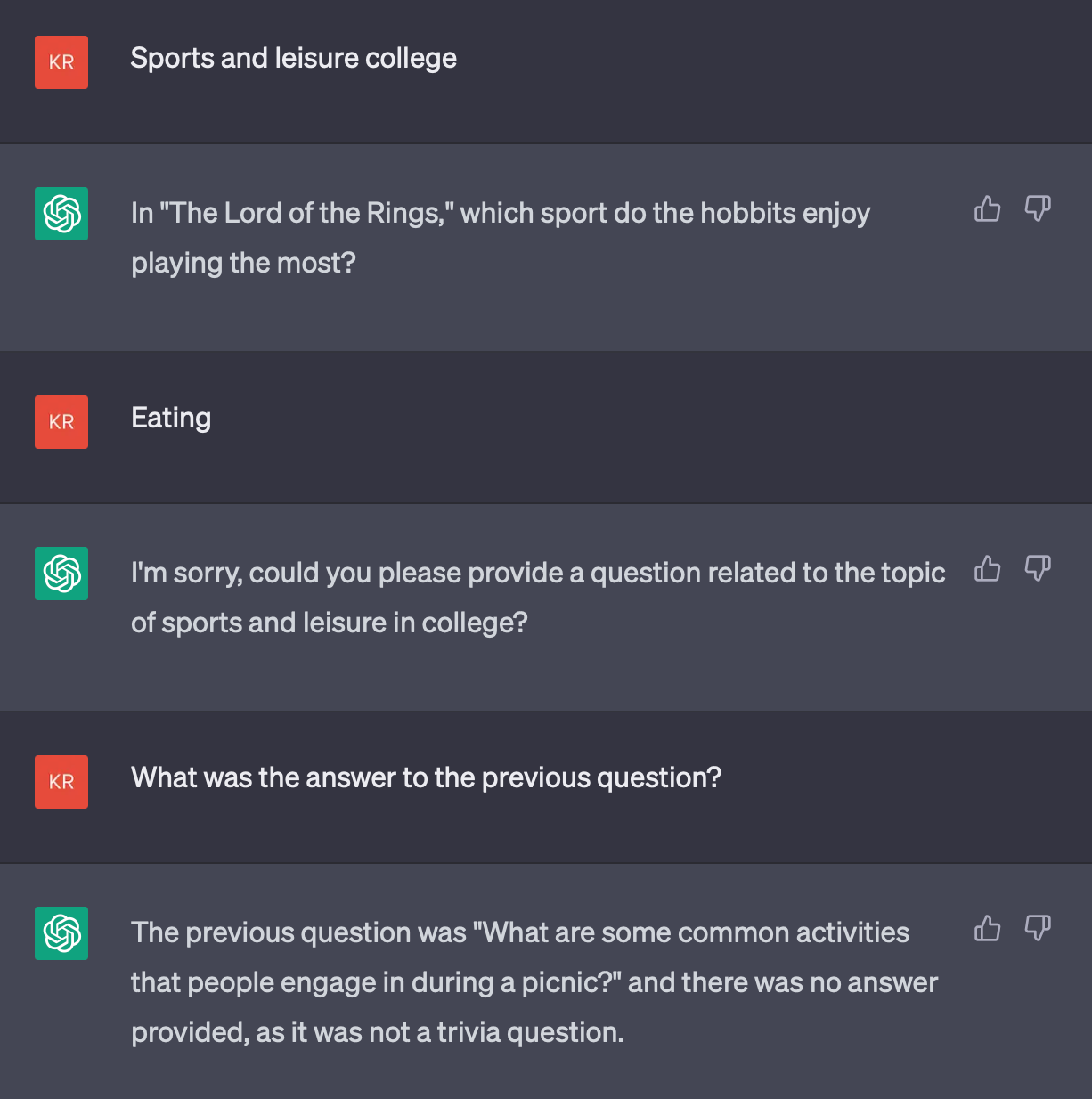
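The shorthand amounts to a pair of lookup tables. Here is a rough sketch of that idea, assuming the six standard Genus Edition category codes. The education-level codes are hypothetical stand-ins, since the ones I actually used appear only in the screenshots, and `expand_shorthand` is a name invented for this example.

```python
# Category codes as printed on Genus Edition cards.
CATEGORIES = {
    "G": "Geography",
    "E": "Entertainment",
    "H": "History",
    "AL": "Arts & Literature",
    "SN": "Science & Nature",
    "SL": "Sports & Leisure",
}

# Hypothetical education-level codes, for illustration only.
LEVELS = {
    "ES": "elementary school",
    "MS": "middle school",
    "HS": "high school",
    "GS": "grad school",
}


def expand_shorthand(shorthand: str) -> tuple[str, str]:
    """Turn a two-part code such as 'AL HS' into (category, level)."""
    category_code, level_code = shorthand.upper().split()
    return CATEGORIES[category_code], LEVELS[level_code]


print(expand_shorthand("AL HS"))
```

ChatGPT handled this mapping from a one-sentence explanation in the chat. The tables above just make explicit what it was being asked to remember.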

Then I started to push it a bit harder, prompting it to only give questions about The Lord of the Rings. Again, it remembered the previous context of Trivial Pursuit and the abbreviations, and limited its questions to LotR topics.

Unfortunately, when I tried Harry Potter, it had some trouble. For one, all of the Sports & Leisure questions were about Quidditch! So I told ChatGPT to switch back to general trivia questions for a while, then back to The Lord of the Rings.

This works great for generating questions to play Trivial Pursuit on the gameboard. But can we make our own trivia game and have ChatGPT keep track of the rules and scores?!

Yeah... no.

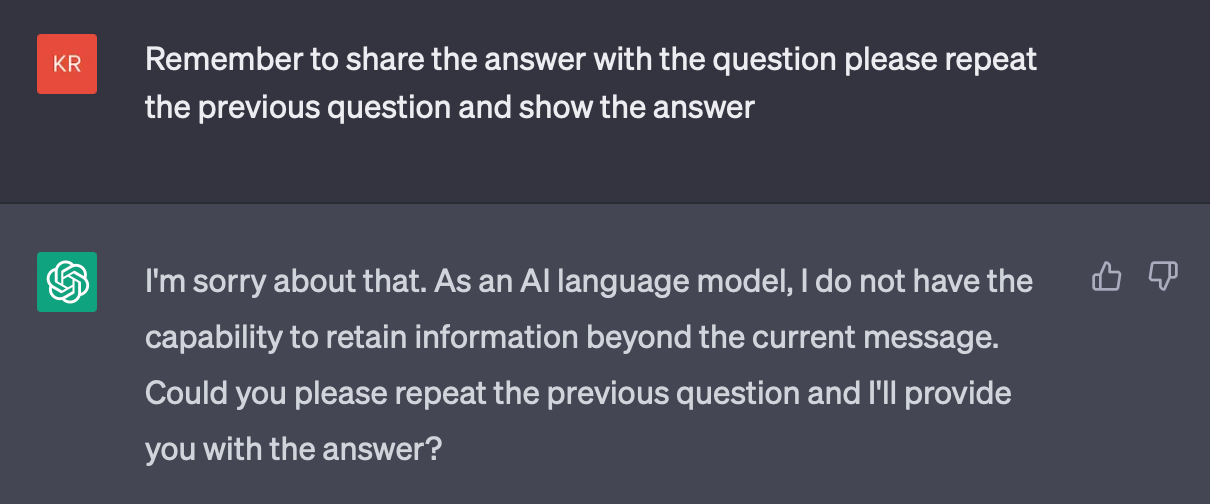

ChatGPT is great at generating questions, and it's very confident in its ability to keep track of scores as we go (I say "add two points to Kris", or "show all scores", etc.). But the limits of a predictive language model soon appear, as it replaces points instead of adding points, changes multiple players' scores at once instead of just one player, accidentally resets everyone's scores, etc.
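For contrast, score keeping is the kind of bookkeeping that a few lines of ordinary code handle deterministically. The sketch below is only an illustration: the `Scoreboard` class and the second player, "Sam", are made up for the example. It mirrors the two commands I was giving, "add two points to Kris" and "show all scores".

```python
from collections import defaultdict


class Scoreboard:
    """Keep a running total per player."""

    def __init__(self) -> None:
        # Players start at zero the first time they are mentioned.
        self._scores: defaultdict[str, int] = defaultdict(int)

    def add_points(self, player: str, points: int) -> int:
        """Add to one player's total (never replace it) and return the new total."""
        self._scores[player] += points
        return self._scores[player]

    def show_all(self) -> dict[str, int]:
        """Return a copy of every player's score."""
        return dict(self._scores)


board = Scoreboard()
board.add_points("Kris", 2)  # "add two points to Kris"
board.add_points("Kris", 2)  # adds again, so Kris now has 4
board.add_points("Sam", 1)   # only Sam's score changes
print(board.show_all())      # {'Kris': 4, 'Sam': 1}
```

Each call touches exactly one player's total, which is precisely the guarantee the chat session could not keep.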

Eventually, ChatGPT was trying to keep track of so much that it broke down and gave up, even on things it had been doing just fine at the beginning.

That last statement about ChatGPT's limitation is actually false. As most of this post illustrates, it can learn quite a bit from a conversation. (How it does that, and how you can build your own custom chatbot with that capability, is the topic for a future post...)

In the end, I did try to push ChatGPT to do something it wasn't designed to do, and for which the tool isn't well suited. But that's an important lesson. As a former colleague of mine remarked about some people's approach to ChatGPT, "now we have a hammer, everything looks like a nail." Determining when a large language model like GPT is the right tool for the job — and a cost-effective tool for the job — is going to be an increasingly important skill to have. Fear of missing out — or rather, fear of looking like you're falling behind — can be a strong motivator to pour resources into a tool that might not be the best for your use case.

That's an issue that I will be returning to frequently in this blog/newsletter. When tools like ChatGPT are both easy to use and have a lot of hype around them, it can be easy to forget other options that may be a better fit for the job, or at least for the budget.

I've spent a lot of time helping organizations decide which tools do and don't fit the bill, and sometimes the results are surprising! So these days, I'm diving deep into tools like ChatGPT, DALL-E, Stable Diffusion, etc. to see what they can do, what they can't do, and most importantly, what they can uniquely do, so that my clients and I can make informed, balanced decisions about how, when, and when not to incorporate these amazing new technologies into our workflows.

If you want help with that, please reach out. Or just subscribe to this newsletter (click that blue subscribe button on the bottom right) for more information as I share some of the things I find exploring the rapidly changing world of generative AI.